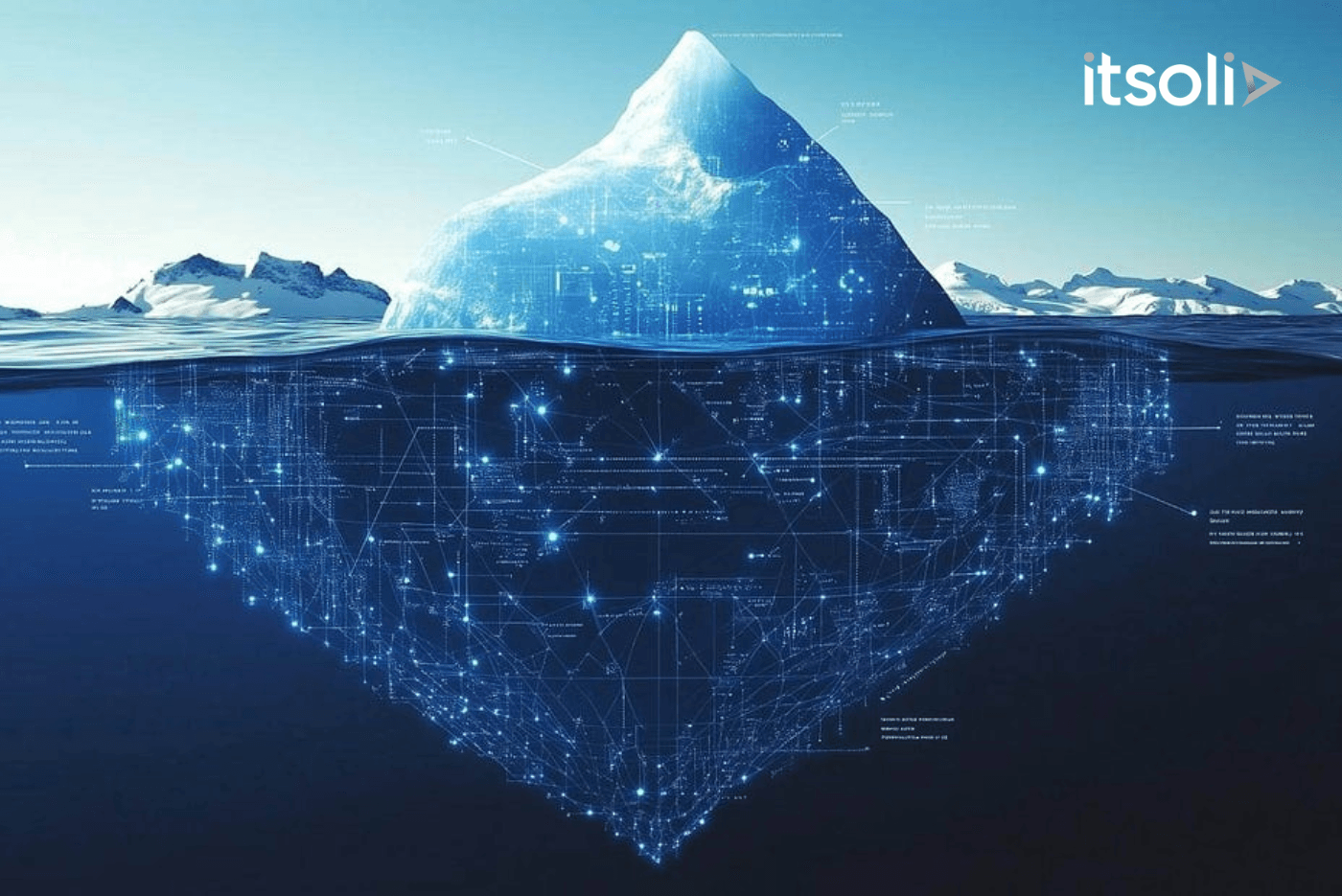

The Integration Iceberg: Why Custom AI Fails Without System Context

August 27, 2025

Models May Be Smart, but Enterprises Are Complex

You have trained a custom AI model. It works on sample data. It passes tests. It even outperforms your baseline. But once you push it live—nothing changes. Business users still rely on spreadsheets. Analysts revert to manual tagging. And operations remain stuck in old patterns.

What went wrong?

Most AI failures in the enterprise have nothing to do with the model’s accuracy. They stem from a deeper, less visible challenge: system context. The model was never integrated into the messy, interconnected, legacy-heavy reality that defines how your enterprise runs.

You saw the tip of the iceberg. But the bulk of AI’s operational value lies underneath—in the parts most companies ignore.

AI Integration Is More Than Just a Data Pipeline

Too many companies treat AI integration like ETL (extract, transform, load). Pull data, train model, pipe output. But integrating AI into an enterprise means wiring it into:

- Systems of record

- Systems of action

- Systems of accountability

Let us unpack that.

- Systems of record – Where the data originates. CRMs, ERPs, ticketing platforms, custom SQL warehouses. AI must understand the structure, quirks, and lineage of this data.

- Systems of action – Where decisions get implemented. Procurement systems, customer service platforms, inventory controls. AI must trigger change here—not just send an alert.

- Systems of accountability – Where outcomes are tracked. Auditing dashboards, compliance logs, performance KPIs. AI must close the loop here to measure impact and trustworthiness.
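To make the three roles concrete, here is a minimal sketch of one scoring pass that touches all of them. Everything in it is hypothetical: `Customer`, `ActionQueue`, `AuditLog`, and the toy `churn_score` rule are in-memory stand-ins for a real CRM, agent work queue, compliance log, and trained model. The point is the shape of the loop: read from the system of record, act in the system of action, and record every decision in the system of accountability.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Customer:
    """Hypothetical row pulled from a system of record (e.g., a CRM)."""
    customer_id: str
    tenure_months: int
    support_tickets_90d: int


def churn_score(c: Customer) -> float:
    """Toy rule standing in for a trained churn model (illustration only)."""
    raw = 0.1 + 0.05 * c.support_tickets_90d - 0.005 * c.tenure_months
    return max(0.0, min(1.0, raw))  # clamp to [0, 1]


@dataclass
class ActionQueue:
    """Hypothetical system of action, e.g. an agent work queue."""
    tasks: list = field(default_factory=list)

    def create_task(self, customer_id: str, reason: str) -> None:
        self.tasks.append({"customer_id": customer_id, "reason": reason})


@dataclass
class AuditLog:
    """Hypothetical system of accountability: every score is recorded."""
    entries: list = field(default_factory=list)

    def record(self, **event) -> None:
        event["ts"] = datetime.now(timezone.utc).isoformat()
        self.entries.append(event)


def run_integrated_scoring(customers, queue: ActionQueue, log: AuditLog,
                           threshold: float = 0.5) -> None:
    for c in customers:
        score = churn_score(c)                  # data from the system of record
        triggered = score >= threshold
        if triggered:                           # change happens in the system of action
            queue.create_task(c.customer_id, f"churn risk {score:.2f}")
        log.record(customer_id=c.customer_id,   # the loop closes in the system of accountability
                   score=round(score, 3),
                   action_triggered=triggered)


# Example run: one high-risk and one low-risk customer
queue, log = ActionQueue(), AuditLog()
run_integrated_scoring([Customer("C1", 3, 10), Customer("C2", 48, 0)], queue, log)
```

Only C1 crosses the threshold and lands in the work queue, but both decisions are logged, so the "no action" case can be audited too.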

When the model sits outside these systems—say, in a Jupyter notebook or a dashboard—it cannot influence the business. It becomes analysis, not action.

The Real Cost of Ignoring System Context

A major telco deployed a churn prediction model. It could forecast customer attrition with 87 percent accuracy. But retention improved by only 2 percent. Why?

- The customer support agents never saw the AI score.

- Their CRM required manual entry to trigger an outreach.

- No incentive existed to act on AI recommendations.

- There was no logging to validate whether intervention worked.

The model knew who was going to churn. But the system context was not built to act on that knowledge.

The cost? Months of wasted work, lower ROI, and a more skeptical executive team.

Now multiply that across use cases—fraud, forecasting, recommendation, routing—and the pattern becomes clear: system blindness kills AI adoption.

Mapping the Integration Iceberg

To avoid the trap, enterprises need to map the full stack of integration touchpoints—not just where the model sits. Here is a simplified breakdown:

| Layer | What It Includes | AI Impact |
|---|---|---|
| Application Layer | CRMs, ERPs, ticketing systems | Where AI outputs are used or ignored |
| Process Layer | SOPs, SLAs, workflows | Defines how AI-triggered actions happen |
| Data Layer | Warehouses, lakes, APIs | Feeds the model and stores results |
| Governance Layer | Audit logs, role access, policies | Enables explainability and compliance |
| Communication Layer | Dashboards, alerts, APIs | Shows insights to the right users at the right time |

Integration is not just technical. It is organizational. Each of these layers has owners, politics, legacy constraints, and KPIs. Ignoring them creates friction—or worse, total rejection.

What Good Integration Looks Like

Let us take an example from a logistics company.

They deploy a routing optimization model to reduce delivery times.

Old Workflow:

- Orders come in via e-commerce platform

- Dispatch team manually plans routes

- Drivers use printed sheets

AI-Integrated Workflow:

- Orders flow into a unified order management system

- The AI model suggests optimized routes

- Routes auto-sync to a driver app via API

- Dispatch can override suggested routes when constraints (e.g., weather) arise

- Delivery times are tracked and fed back into the model
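The override and feedback steps are where many pipelines stop short, so here is a rough sketch of both. It assumes a hypothetical `choose_route` helper, a `DeliveryRecord` shape, and an in-memory `FeedbackLog`; none of these come from the company in the example. Tagging each delivery with whether the route came from the model or from a dispatch override lets the on-time rate be compared per source.

```python
from dataclasses import dataclass, field


def choose_route(suggested_stops, active_constraints, dispatcher_override):
    """Keep the model's route unless dispatch flags a constraint (e.g., weather)."""
    if active_constraints:
        return dispatcher_override(suggested_stops, active_constraints), "dispatch_override"
    return suggested_stops, "model"


@dataclass
class DeliveryRecord:
    order_id: str
    route_source: str        # "model" or "dispatch_override"
    promised_minutes: int
    actual_minutes: int


@dataclass
class FeedbackLog:
    """Hypothetical store of delivery outcomes fed back to the model team."""
    records: list = field(default_factory=list)

    def add(self, record: DeliveryRecord) -> None:
        self.records.append(record)

    def on_time_rate(self, source=None) -> float:
        """Share of deliveries that met their promise, optionally filtered by route source."""
        rows = [r for r in self.records if source is None or r.route_source == source]
        if not rows:
            return 0.0
        return sum(r.actual_minutes <= r.promised_minutes for r in rows) / len(rows)


def reverse_stops(stops, constraints):
    """Stand-in for a dispatcher's manual re-plan."""
    return list(reversed(stops))


# Example: a storm forces dispatch to re-plan the model's stop order.
route, source = choose_route(["A", "B", "C"], ["storm"], reverse_stops)

log = FeedbackLog()
log.add(DeliveryRecord("o1", "model", 60, 55))
log.add(DeliveryRecord("o2", "model", 60, 70))
log.add(DeliveryRecord("o3", source, 45, 40))
```

Here `log.on_time_rate()` is 2/3 overall, 0.5 for model routes, and 1.0 for the overridden one, which is exactly the kind of signal that can be fed back into retraining or into rules for when overrides should apply.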

What changed?

- Data systems were connected

- Workflows were automated end-to-end

- Human intervention was designed into the workflow, not left to ad hoc reaction

- KPIs shifted from “number of deliveries” to “on-time delivery rate”

This is what real AI integration looks like. It is not about deploying a model. It is about redesigning the system context in which decisions happen.

Why Custom AI Fails Harder

Pre-built tools ship with built-in assumptions about how their outputs will be used. With custom AI, nothing is assumed: you are expected to tailor everything, including:

- Data prep

- Model training

- Output formatting

- Action triggers

- Feedback loops

If you do not build the end-to-end flow, your model becomes a silo. Worse, it adds a new layer of technical debt: models that work in theory but have no hook into real-world operations.

Custom AI gives you power. But without integration discipline, that power gets stranded.

A Framework for Context-Driven Integration

Here is a practical approach to build AI solutions that survive the production cliff:

1. Map Systems and Owners

List all upstream and downstream systems that touch your model. Who owns them? What constraints exist? What is the current level of automation?
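One lightweight way to start this map is a structured inventory that can be checked automatically. The sketch below is an assumption, not a prescribed format: `SystemTouchpoint`, its fields, and the two gap rules in `integration_gaps` (no owner, or a downstream system that still needs manual entry) are illustrative choices echoing the telco example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SystemTouchpoint:
    name: str
    direction: str                 # "upstream" (feeds the model) or "downstream" (acts on outputs)
    owner: Optional[str]           # accountable team, or None if nobody owns it
    automation: str                # "manual", "partial", or "automated"
    constraints: tuple = ()        # e.g. ("nightly batch only",)


def integration_gaps(systems):
    """Flag touchpoints likely to block adoption: no owner, or manual hand-offs downstream."""
    gaps = []
    for s in systems:
        if s.owner is None:
            gaps.append(f"{s.name}: no accountable owner")
        if s.direction == "downstream" and s.automation == "manual":
            gaps.append(f"{s.name}: outputs require manual entry")
    return gaps


# Hypothetical inventory for a churn model
inventory = [
    SystemTouchpoint("Data warehouse", "upstream", None, "automated", ("nightly batch only",)),
    SystemTouchpoint("CRM", "downstream", "Sales Ops", "manual"),
    SystemTouchpoint("Compliance log", "downstream", "Risk", "automated"),
]
```

Running `integration_gaps(inventory)` surfaces the unowned warehouse and the CRM's manual-entry step, the same kind of blocker that kept the telco's agents from acting on churn scores.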

2. Align Model Outputs to Action Paths

Does your AI produce outputs that actually trigger workflows? Can it write to the right fields? Can it send an API call or webhook to a connected system?
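As a sketch of what "send an API call or webhook" can mean in practice, the function below packages a model output as a JSON POST using only the Python standard library. The endpoint URL, the payload field names, and the `model_version` tag are hypothetical; a real integration would use whatever fields the target system actually expects.

```python
import json
import urllib.request


def build_action_request(endpoint: str, customer_id: str, score: float,
                         recommended_action: str) -> urllib.request.Request:
    """Package a model output as a JSON webhook for a downstream system of action."""
    payload = {
        "customer_id": customer_id,             # must match the target system's key field
        "churn_score": round(score, 3),
        "recommended_action": recommended_action,
        "model_version": "churn-model-v1",      # hypothetical tag so audits can trace the source
    }
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_action_request("https://crm.example.com/hooks/churn", "C1", 0.812, "schedule_outreach")
# urllib.request.urlopen(req, timeout=10) would send it; not executed here.
```

Building the request separately from sending it makes the payload easy to test against the receiving system's field contract before anything goes live.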

3. Create Layered Feedback Loops

You need validation at multiple levels:

- Model accuracy metrics

- User adoption tracking

- Business outcome tracking

Build each into the deployment roadmap.
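The three levels can be computed side by side from the same outcome records. This is a minimal sketch under stated assumptions: each record is a dict with hypothetical keys (`flagged`, `group`, `contacted`, `churned`), and a "holdout" group of flagged customers is deliberately left uncontacted so model precision and business lift can be measured without the intervention muddying them.

```python
def _rate(rows, predicate) -> float:
    """Fraction of rows satisfying predicate; 0.0 for an empty list."""
    return sum(1 for r in rows if predicate(r)) / len(rows) if rows else 0.0


def layered_feedback(records):
    """Compute one metric per feedback layer from flagged-customer records.

    Each record is a dict with hypothetical keys:
      flagged (bool), group ("pilot" or "holdout"), contacted (bool), churned (bool).
    Holdout customers were flagged but deliberately not contacted.
    """
    pilot = [r for r in records if r["flagged"] and r["group"] == "pilot"]
    holdout = [r for r in records if r["flagged"] and r["group"] == "holdout"]
    return {
        # Model layer: how often a flag was right when nobody intervened
        "model_precision": _rate(holdout, lambda r: r["churned"]),
        # Adoption layer: how often agents acted on a flag
        "adoption_rate": _rate(pilot, lambda r: r["contacted"]),
        # Business layer: retention of flagged pilot customers vs. flagged holdout
        "retention_lift": _rate(pilot, lambda r: not r["churned"])
                          - _rate(holdout, lambda r: not r["churned"]),
    }
```

The retention comparison is only a fair estimate of impact if customers are assigned to pilot and holdout at random; otherwise the lift may reflect who was chosen rather than what the AI changed.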

4. Pilot Inside Real Context

Do not test in sandbox environments only. Run limited pilots in live systems. Watch how users react. Trace the AI’s impact from decision to outcome.
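A common way to keep a live pilot limited and traceable is deterministic hash-based assignment, sketched below. The `in_pilot` name, the salt string, and the 10 percent share in the usage line are illustrative assumptions. Hashing each account ID means the same account always lands in the same arm, so its decisions and outcomes can be followed over time.

```python
import hashlib


def in_pilot(account_id: str, pilot_share: float, salt: str = "churn-pilot") -> bool:
    """Deterministically place a fixed share of live accounts in the AI pilot.

    Hashing (rather than random.random()) keeps each account in the same arm
    across runs, so outcomes can be traced from decision to result.
    """
    digest = hashlib.sha256(f"{salt}:{account_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000   # roughly uniform value in [0, 1)
    return bucket < pilot_share
```

Usage might look like `pilot_ids = [a for a in live_accounts if in_pilot(a, 0.10)]`. Changing the salt reshuffles assignments for a new pilot, while raising `pilot_share` widens the rollout without moving existing pilot accounts out of it.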

5. Treat Integration as a Product, Not a Task

Dedicate resources. Build user stories. Monitor adoption. Integration is not a checkbox—it is a capability.

The Payoff: Trust, Speed, and Scale

When AI is integrated into its system context, the payoff shows up on several fronts:

- Decisions are automated at the edge

- Teams trust AI because they see how it fits into their tools

- Leadership sees measurable outcomes, not abstract improvements

- Feedback loops make AI self-improving instead of static

Integration is the multiplier. It turns intelligence into impact.

Ignore it, and you are just building a model museum.

© 2026 ITSoli